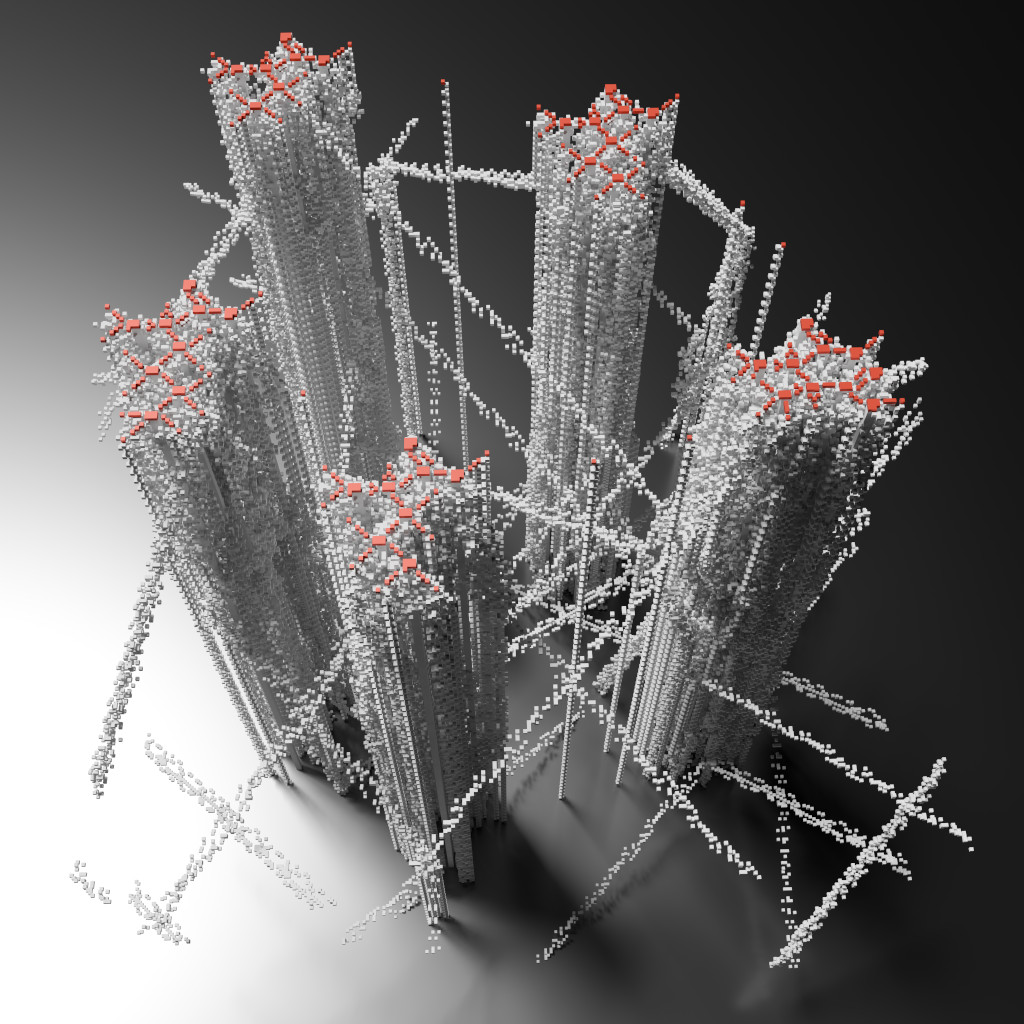

It’s with sadness that I report we’ve reached the end of the Droste.

“ドロステのはてで僕ら” (Dorosute no hate de bokura), which literally means “We at the end of the Droste”, is a great movie you should check out if you’re into recursion.

Droste, besides lending its name to the Droste effect, was also a kick-ass cocoa, always in my pantry in every house I lived in. The company got gobbled up by a larger one that kept the brand name and continued production of some products, but seemingly canned the cocoa. Sadness!